Llama 3.1, the latest open-source AI model developed by Meta, offers high performance and flexibility in natural language processing (NLP) tasks. This post covers Llama 3.1’s resource requirements, download process, and basic usage, and highlights its advantages through comparisons with Claude 3.5 and GPT-4.

What is Llama 3.1?

Llama 3.1 is an autoregressive language model built on an optimized transformer architecture. It is available in three sizes: 8 billion (8B), 70 billion (70B), and 405 billion (405B) parameters. It handles a wide range of NLP tasks, from text generation to question answering.
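“Autoregressive” means the model generates text one token at a time, feeding each new token back in as input for the next prediction. The toy sketch below shows only that loop. The hypothetical `toy_next_token` function stands in for the transformer (it looks up a tiny hard-coded table instead of attending over the whole prefix), and decoding is greedy rather than sampled.

```python
from typing import Callable, Dict, List

# Hypothetical stand-in for a language model: a fixed table of "most likely
# next token". A real transformer conditions on the entire prefix.
BIGRAMS: Dict[str, str] = {
    "<s>": "the",
    "the": "llama",
    "llama": "speaks",
    "speaks": "</s>",
}


def toy_next_token(prefix: List[str]) -> str:
    """Predict the next token from the prefix (toy: uses only the last token)."""
    return BIGRAMS.get(prefix[-1], "</s>")


def generate(next_token: Callable[[List[str]], str], max_new_tokens: int = 10) -> List[str]:
    """Greedy autoregressive loop: predict, append, repeat until end-of-sequence."""
    tokens = ["<s>"]
    for _ in range(max_new_tokens):
        tok = next_token(tokens)  # each step sees everything generated so far
        if tok == "</s>":
            break
        tokens.append(tok)
    return tokens[1:]  # drop the start-of-sequence marker


print(generate(toy_next_token))  # ['the', 'llama', 'speaks']
```

Real models replace the table lookup with a forward pass over the full token sequence and usually sample from the predicted distribution instead of always taking the top token.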

Key Features

- Open Source: The model weights are free to download and use under the Llama 3.1 Community License, with no licensing fees, making them accessible to a wide range of users.

- High Performance: Efficiently handles complex NLP tasks.

- Scalability: Designed to run on modern multi-core CPUs and powerful GPUs, suitable for small to large-scale operations.

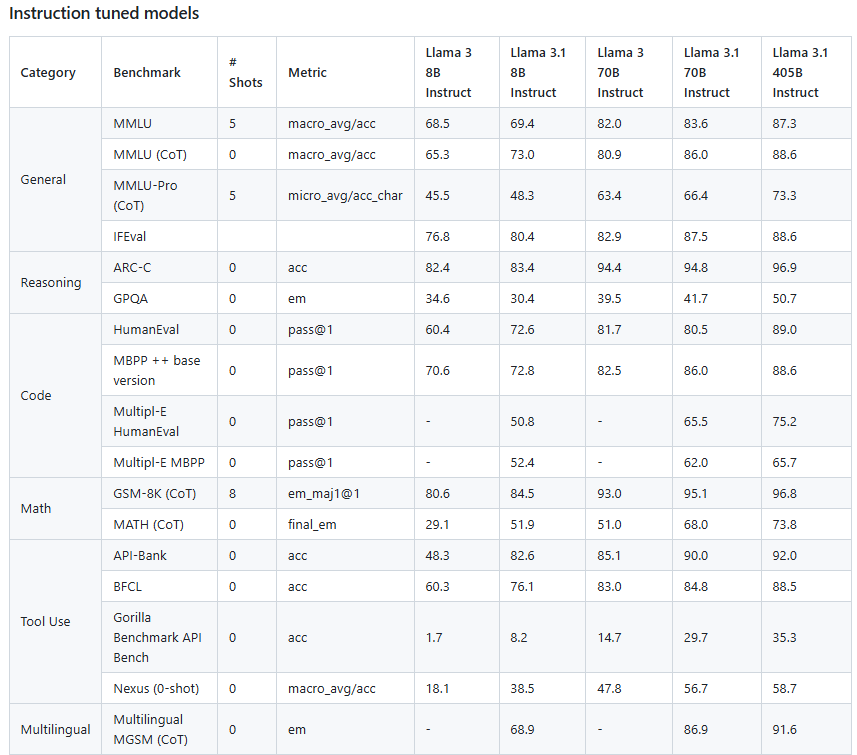

Benchmark Performance

In Meta’s published benchmarks, the Llama 3.1 405B model is competitive with GPT-4 and outperforms it on several tasks. Together with the release of Claude 3.5 Sonnet, this suggests that OpenAI’s dominance is being challenged and that the AI landscape is shifting.

System Requirements

To run the Llama 3.1 8B and 70B models effectively, you’ll need:

- CPU: At least 8 cores of a recent CPU.

- GPU:

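- 8B model: GPU with 16GB+ VRAM to hold the weights in 16-bit (bf16) precision; a GPU with 8GB+ VRAM (e.g., NVIDIA RTX 3000 series) can work with 4-bit quantization.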

- 70B model: Multiple GPUs, since the 16-bit weights alone take about 140GB (e.g., two 80GB NVIDIA A100s); GPUs with 24GB VRAM each require quantization or more cards.

- RAM:

- 8B model: Minimum 16GB RAM.

- 70B model: 64GB+ RAM.

- Storage: SSD space for the model files (about 16GB for 8B and 140GB for 70B in 16-bit precision), plus several terabytes if you also work with large datasets.
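A rough way to sanity-check GPU memory needs: weight memory is about parameter count × bytes per parameter (2 bytes in bf16, about 0.5 bytes at 4-bit), and the key/value (KV) cache grows with sequence length and batch size, which the `--max_seq_len` and `--max_batch_size` flags in the `torchrun` command below control. The sketch estimates both. The 8B layer and head counts used here (32 layers, 8 key/value heads, head dimension 128) are assumptions taken from the model’s published configuration and should be checked against the `config.json` you download. Activations and framework overhead are ignored, so treat the results as lower bounds.

```python
# Rough GPU memory estimates for Llama 3.1 inference (lower bounds:
# activations, CUDA context, and framework overhead are not included).


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the model weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def kv_cache_gb(
    n_layers: int,
    n_kv_heads: int,
    head_dim: int,
    seq_len: int,
    batch_size: int,
    bytes_per_value: float = 2.0,  # bf16
) -> float:
    """KV-cache size: one key and one value vector per layer, KV head, token, and sequence."""
    values = 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size
    return values * bytes_per_value / 1e9


# Nominal parameter counts; the released checkpoints are slightly larger.
print(f"8B,  bf16 : {weight_memory_gb(8e9, 2):.1f} GB")    # 16.0 GB
print(f"8B,  4-bit: {weight_memory_gb(8e9, 0.5):.1f} GB")  # 4.0 GB
print(f"70B, bf16 : {weight_memory_gb(70e9, 2):.1f} GB")   # 140.0 GB

# Assumed 8B config (verify in config.json): 32 layers, 8 KV heads, head_dim 128.
# seq_len=512 and batch_size=6 mirror the flags in the torchrun command.
print(f"KV cache  : {kv_cache_gb(32, 8, 128, 512, 6):.2f} GB")  # 0.40 GB
```

The KV-cache term is small at 512 tokens but scales linearly with both flags, so long contexts or large batches can add several gigabytes on top of the weights.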

Download and Usage

Here’s how to download and set up Llama 3.1:

1. Download model weights and tokenizer:

- Visit the Meta Llama website and accept the license terms.

- You’ll receive a signed URL via email upon approval.

2. Installation and setup:

- Run the download script:

```shell
./download.sh
```

- Enter the URL from your email to start the download.

3. Install dependencies:

- In a conda environment with PyTorch and CUDA installed, run:

```shell
pip install -e .
```

4. Run the model:

- Use this command to run the model locally:

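```shell
torchrun --nproc_per_node=1 example_chat_completion.py --ckpt_dir Meta-Llama-3-8B-Instruct/ --tokenizer_path Meta-Llama-3-8B-Instruct/tokenizer.model --max_seq_len 512 --max_batch_size 6
```

- The directory name `Meta-Llama-3-8B-Instruct/` is only an example; point `--ckpt_dir` and `--tokenizer_path` at the folder and `tokenizer.model` file your download actually created.

Basic Usage Example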

Here’s a simple example using Llama 3.1 with Hugging Face Transformers:

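```python
from transformers import pipeline
import torch

# Llama 3.1 8B Instruct on the Hugging Face Hub (gated: accept the license on the model page first)
model_id = "meta-llama/Llama-3.1-8B-Instruct"

# Named `pipe` so the `pipeline` factory function is not shadowed
pipe = pipeline(
    "text-generation",
    model=model_id,
    model_kwargs={"torch_dtype": torch.bfloat16},  # 16-bit weights halve memory vs. float32
    device_map="auto",  # spread layers across available GPUs/CPU (requires accelerate)
)

messages = [
    {"role": "system", "content": "You are a pirate chatbot who always responds in pirate speak!"},
    {"role": "user", "content": "Who are you?"},
]

outputs = pipe(
    messages,
    max_new_tokens=256,
    do_sample=True,  # sample instead of greedy decoding
    temperature=0.6,
    top_p=0.9,
)

# With chat-style input, "generated_text" holds the whole conversation;
# the last entry is the assistant's reply.
print(outputs[0]["generated_text"][-1])
```

Comparison with Claude 3.5 and GPT-4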

Claude 3.5:

- Focus: Safety and ethical AI use.

- Resource Requirements: Accessible through cloud-based API, requiring no special hardware on the user’s end.

- Strengths: Strong in conversational and interactive applications, with an emphasis on ethical considerations.

GPT-4:

- Focus: Providing top performance across various tasks.

- Resource Requirements: Accessible through OpenAI’s cloud-based API, requiring no special hardware on the user’s end.

- Strengths: Excellent text generation and comprehension, suitable for diverse applications.

- Cost: Charges based on API usage.

Cost-Effectiveness:

- Llama 3.1: No licensing fees, but self-hosting requires investment in powerful hardware.

- Claude 3.5: Usage-based API fees, but no local hardware to buy.

- GPT-4: Usage-based API fees, but no local hardware to buy.
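This trade-off between up-front hardware for Llama 3.1 and usage-based charges for hosted APIs can be framed as a simple break-even calculation: self-hosting pays off once the API fees you avoid each month exceed the hardware cost. The function below is a minimal sketch; every price and volume in it is a hypothetical placeholder, not a quote from Meta, Anthropic, or OpenAI, and it ignores maintenance, engineering time, and hardware resale value.

```python
import math


def break_even_months(
    hardware_cost: float,
    api_price_per_million_tokens: float,
    tokens_per_month: float,
    monthly_hosting_cost: float = 0.0,
) -> float:
    """Months until a one-off hardware purchase costs less than paying per API token.

    All inputs are hypothetical and in the same currency; monthly_hosting_cost
    covers ongoing self-hosting expenses such as electricity.
    """
    monthly_api_cost = api_price_per_million_tokens * tokens_per_month / 1e6
    monthly_savings = monthly_api_cost - monthly_hosting_cost
    if monthly_savings <= 0:
        return math.inf  # self-hosting never pays off at this volume
    return hardware_cost / monthly_savings


# Hypothetical placeholder numbers, not real prices.
print(break_even_months(2000, 10, 50e6, monthly_hosting_cost=100))  # 5.0
print(break_even_months(2000, 10, 5e6, monthly_hosting_cost=100))   # inf
```

If the break-even period is longer than the hardware’s useful life, the hosted API is likely the cheaper option at that volume.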

Conclusion

Llama 3.1 offers a cost-effective solution for users who can meet its hardware requirements. Its strong performance and community support make it an attractive open-source choice for developers and researchers. Claude 3.5 and GPT-4 also offer impressive capabilities, but Llama 3.1 stands out by combining robust performance with weights you can download and run on your own hardware.

For more information on Llama 3.1 and how to get started, check out the official Meta Llama GitHub repository.